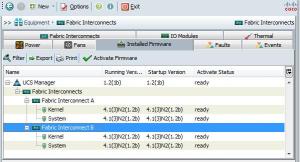

UCS Code Upgrade Success: Running 1.2(1b) Now!!

I have been blogging for a while about the planned code upgrade to our production UCS environment, and we finally cut over to 1.2(1b) on all the system components! Success. Here is a quick rundown.

We decided to go with the 1.2(1b) code because the main difference between it and 1.1(1l) was the support for the new Nehalem CPU that will be available in the B200 and B250 M2 blades in about a month. We want to start running with these new CPUs this summer; more cores means more guests and lower cost.

The documentation from Cisco on the process was pretty good and provided great step-by-step instructions along with what to expect. We followed it closely and did not have any issues; all worked as expected.

Here is how we did it:

First step was to perform a backup of the UCS configuration (you always want a fall back, but we did not need it).

We started by upgrading the BMC on each blade via the Firmware Management tab; this does not disrupt the servers and was done during the day in about 30 minutes. We took it slow on the first 8 BMCs and then did a batch job for the last 8.

At 6 PM we ran through the “Prerequisite to Upgrade . . .” document a second time to confirm all components were healthy and ready for an upgrade; no issues. Next we confirmed that all HBA multipath software was healthy and seeing all 4 paths, and that NIC teaming was healthy; no issues.

At 6:30 PM we pre-staged the new code on the FEX (IO modules) in each chassis. This meant we clicked “Set Startup Version Only” for all 6 modules (2 per chassis times 3). Because we checked the box for “Set Startup Version Only” there was NO disruption of any servers; nothing is rebooted at this time.

At 6:50 PM we performed the upgrade to the UCS Manager software, which is a matter of activating it via the Firmware Management tab. No issues, and it took less than 5 minutes. When it was complete we were able to log in and perform the remaining tasks listed below. Note: this step does NOT disrupt any server functions; everything continues to work normally.

At 7:00 PM it was time for the stressful part: the activation of the new code on the fabric interconnects, which results in a reboot of the subordinate side of the UCS system (the B side in my case). To prepare for this step we did a few things, because all the documentation indicated there can be “up to a minute disruption” of network connectivity during the reboot (it does NOT impact the storage I/O; the Fibre Channel protocol and multipath take care of it). I believe this disruption is related to the arp-cache on the fabric interconnects. Here is what we experienced.

UCS fabric interconnect A is connected to the 6513 core Ethernet switch port group 29. UCS fabric interconnect B is connected to the 6513 core Ethernet switch port group 30. During normal functioning the traffic is pretty balanced between the two port groups, about 60/40.

My assumption was that when the B side went down for the reboot, we would flush the arp-cache for port group 30 and the 6513 would then quickly re-learn that all the MAC addresses now reside on port group 29. Well, it did not actually work like that . . . when the B side rebooted, the 6513 cleared the arp-cache on port group 30 right away on its own, and it took about 24 seconds (yes, I was timing it) for the disrupted traffic to start flowing via port group 29 (the A side). Once the B side finished its reboot in 11 minutes (the documentation indicated 10 minutes), traffic automatically began flowing through both the A and B sides again as normal.
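For anyone who wants to time this kind of gap without a stopwatch, here is a minimal sketch. It assumes you have logged connectivity probes (for example, one ping per second to a guest on the affected side) as timestamp and success pairs. The function name and the sample data are made up for illustration and are not from our actual upgrade logs.

```python
from typing import List, Optional, Tuple

# One probe result: (seconds since the start of the test, did the probe succeed?)
Sample = Tuple[float, bool]


def longest_outage(samples: List[Sample]) -> float:
    """Return the longest outage in seconds.

    An outage runs from the first failed probe to the first successful
    probe after it. An outage still open when the log ends is ignored.
    """
    longest = 0.0
    outage_start: Optional[float] = None
    for timestamp, ok in samples:
        if not ok and outage_start is None:
            outage_start = timestamp  # first failed probe of a new outage
        elif ok and outage_start is not None:
            longest = max(longest, timestamp - outage_start)
            outage_start = None  # connectivity is back
    return longest


# Hypothetical log: one probe per second, failing from t=10 through t=33.
samples = [(float(t), not (10 <= t < 34)) for t in range(41)]
print(longest_outage(samples))  # 24.0
```

With one probe per second the result is only accurate to about a second, which is plenty for telling a 24 second gap from a 60 second one.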

So what was happening for the 24 seconds? I suspect the arp-cache on the A side fabric interconnect knew all the MACs that were talking on the B side, so it would not pass that traffic until it timed out and relearned.

As I have posted previously we run our vCenter on a UCS blade using NIC teaming. I had confirmed vCenter was talking to the network on the A side, so after we experienced the 24 second disruption on the B side I forced my vCenter traffic to the B side before rebooting the A side. This way we did not drop any packets to vCenter (did this by disabling the NIC in the OS that was connected to the A side and let NIC teaming use only the B side).

This approach worked great for vCenter; we did not lose connectivity when the A side was rebooted. However, I should have followed this same approach with all of my ESX hosts, because most of them were talking on the A side. VMware HA did not like the 27 second disruption and was confused for a while afterwards (however, full HA did NOT kick in). All of the hosts came back, as did all of the guests except for 3: 1 test server, 1 Citrix Provisioning server and 1 database server had to be restarted due to the disruption in network traffic (again, the storage I/O was NOT disrupted; multipath worked great).
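The lesson above boils down to a simple check before each fabric interconnect reboot: find every host whose active NIC is on the side about to go down and move it to the other side first. Here is a rough sketch of that check. The host names and the dictionary of active sides are hypothetical; in practice you would have to gather that information from your own NIC teaming status.

```python
from typing import Dict, List


def hosts_to_move(active_side: Dict[str, str], rebooting_side: str) -> List[str]:
    """Return the hosts currently talking on the side that is about to reboot."""
    return sorted(
        host for host, side in active_side.items() if side == rebooting_side
    )


# Hypothetical inventory: which fabric side each host is actively using.
active_side = {
    "vcenter": "A",
    "esx01": "A",
    "esx02": "B",
    "esx03": "A",
}

# Before rebooting the A side, these hosts should be forced over to B.
print(hosts_to_move(active_side, "A"))  # ['esx01', 'esx03', 'vcenter']
```

The moving itself is still a manual step, like disabling the A side NIC in the OS as we did for vCenter.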

Summary:

Overall it went very well and we are pleased with the results. Our remaining task is to apply the blade BIOS updates to the rest of the blades (we did 5 of them tonight) using the Service Profile policy: Host Firmware Packages. These will be done by putting each ESX host into Maintenance Mode and rebooting the blade. It takes about 2 utility boots for it to take effect, or about 10 minutes per server. We should have this done by Wednesday.
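As a rough back-of-the-envelope estimate of the remaining BIOS work, here is the arithmetic as a small sketch. The total of 16 blades is my assumption based on the two batches of 8 BMCs mentioned earlier, and the estimate covers only the reboot time, not the time to evacuate each host into Maintenance Mode.

```python
def remaining_reboot_minutes(total_blades: int, done: int, minutes_each: int) -> int:
    """Estimate total reboot time in minutes for the blades not yet updated."""
    remaining = total_blades - done
    return remaining * minutes_each


# Assumed: 16 blades in total, 5 already updated, about 10 minutes per blade.
print(remaining_reboot_minutes(16, 5, 10))  # 110
```

So that is a little under two hours of reboot time if the blades are done one after another.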

What I liked:

— You have control of each step, as the admin you get to decide when to reboot components.

— You can update each item one at a time or in batches as your comfort level allows.

— The documentation was correct and accurate.
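To make the step-by-step control concrete, the sequence we followed can be written down as an ordered checklist that flags which steps can disrupt Ethernet traffic. This is only a sketch of our own order of operations as described in this post, not Cisco's official procedure, and the class and field names are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Step:
    name: str
    disrupts_ethernet: bool  # True if we saw (or expected) a network blip


# The order we used, as described in this post.
STEPS: List[Step] = [
    Step("Back up the UCS configuration", False),
    Step("Upgrade the BMC firmware on each blade", False),
    Step("Re-run prerequisite checks; verify multipath and NIC teaming", False),
    Step("Set startup version only on the FEX (IO modules)", False),
    Step("Activate UCS Manager", False),
    Step("Activate fabric interconnect B (subordinate) and wait for reboot", True),
    Step("Activate fabric interconnect A and wait for reboot", True),
]


def disruptive_steps(steps: List[Step]) -> List[str]:
    """Return the names of the steps that need traffic moved beforehand."""
    return [step.name for step in steps if step.disrupts_ethernet]


for name in disruptive_steps(STEPS):
    print("Move traffic off this side first:", name)
```

Only the two fabric interconnect activations are flagged, which matches where we saw the 24 to 27 second disruptions.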

What can be improved:

— Need to eliminate the 24 to 27 second Ethernet disruption which is probably due to the arp-cache. Cisco has added a “MAC address Table Aging” setting in the Equipment Global Policies area, maybe this already addresses it.

Evolving Healthcare IT and how do you adapt?

To prep for the Cisco panel discussion, Ben Gibson asked what my role was at my organization. The answer was something like “it started out as managing the network infrastructure: the cabling, LAN/WAN, servers, etc. Then it continued to evolve into virtualization and storage to where I am now.” So I now refer to my role as the manager of technology infrastructure. What Ben said after my explanation has really got me thinking . . . he simply said something like “oh, just like the way the industry has evolved”.

Ben is right. In my healthcare organization my role and responsibilities evolved over time as the technologies changed. Given the size of our IT department, this was partly due to having a small head count and needing to do more with the staff we already had in place. This approach can be challenging at times, but it also lends itself to being creative and expanding your knowledge by trying and doing new things, which in the end can be downright fun at times (the opposite can also be true :)).

Consolidating and managing the different staffing roles as we have changed technologies has made our organization more flexible and able to move forward. I am not saying this is easy or that it does not take work to make it happen, but looking back at how we got to where we are has made me think about it.

As I have been meeting and talking with people from other organizations, I have seen a wide range of comfort, adoption and acceptance with industry change. For example, we gained a comfort level with VMware DRS and vMotion very early on and have been allowing VMware to decide where a server workload should reside by letting it automatically move the servers between hosts. I am surprised when I hear of others who still “balance” the VM workloads by checking DRS recommendations manually and then vMotioning the workload. Or worse, talking to a network switch vendor’s “virtualization expert” and hearing him say virtualization is still in its infancy; that comment/belief saved me time in the end and made my investigation with that vendor shorter.

To take the thoughts further, one of the keys to reducing costs, complexity and increasing your flexibility is when you really start to converge and maximize your datacenter resources. It becomes very difficult to realize these savings and efficiencies if your staff/groups/teams do not work together. You need to ask yourself, does my storage team talk with my server team? Does the network admin know what our virtualization guy is doing? In the organizations where I have seen a storage group that works in their own bubble, that organization begins to struggle by spinning their wheels and wastes resources and dollars.

So why this topic for a blog post? Yes, my team and organization get to use a lot of cool and exciting technology that makes our jobs fun at times, saves the organization time and money and has made us flexible and agile, but it did not just happen. You have to change as the technology changes; if you can do that you will be on the right track.

Disclaimer: No workplace is perfect and my organization is far from perfect, but it is pretty damn good to be here.

Addendum: My director read this post and reminded me “It’s certainly worthy of bringing that weakness (poor communication) to light. If you think about it we unfortunately have some of the same symptoms.”

That is true. Communication is always a work in progress, whether in professional or personal life; there is always room for improvement!

San Jose: That’s a Wrap

Well that was a great day! When I was invited to represent my organization at the Cisco Datacenter Launch to talk about our UCS experience I was humbled, excited and nervous. However, it is not often in someone’s career that they get the opportunity to be included on a panel with such innovative leaders in the technology industry as David Lawler, Soni Jiandani, Boyd Davis and Ben Gibson. Everyone was down to earth, personable and very comfortable to work with on the panel. The goal was to make the event a relaxed discussion, and the point of view of the customer was truly important to the panel and Cisco. I was also amazed at how many people pull the details together for an event of this nature. Lynn, Janne and Marsha were great at making sure I was prepared and helped make everything go off smoothly.

This customer focus continued to be evident after the video was completed. I was able to spend the rest of the day with many key individuals, who made time for me, from the UCS business unit. We had some deep technical discussions on various topics like firmware upgrades, wish lists, directions, ease of use vs. levels of control, etc. I was asked by everyone for input regarding ways to improve as well as talking about how we are using the system.

To end my day on the Cisco campus, David Lawler invited me to his office to meet with him and Mario Mazzola, Senior Vice President of the Server and Virtualization Business Unit (SAVBU). Mario has been a key technology person in Silicon Valley, leading the creation of the 6500 switch product, the Cisco MDS Fibre Channel product and now the Cisco UCS platform (along with many other accomplishments). I think it is fair to say he is a legend in the industry (however, my impression is he is very humble and quickly acknowledges others for their contributions to the projects). We had a conversation focused on the customer views related to the product and how it is Cisco’s goal to continually improve the system. Mario and David are very down to earth people and it was clear to me Cisco is very customer focused from the top of the organization down.

So that’s a wrap for this trip to Cisco in San Jose for now . . .

San Jose: Cisco Datacenter 3.0 Launch

Cool things are happening . . . check out the site for Cisco Datacenter 3.0.

http://www.cisco.com/web/solutions/data_center/dc_breakthrough.html

My organization was invited to participate in a customer case study around the success we have had with Cisco UCS in a production environment. The written and video case studies are now available on Cisco’s web site and are featured on the main page that outlines the new additions and innovations coming for the datacenter.

Tomorrow, April 6, 2010, the official launch will take place at 1 PM EST and can be viewed live online (link to register is above). I have been invited to participate in the event and am honored to represent my organization with Cisco. So check it out, there are some very cool things coming right around the corner.

Disclaimer: The views and opinions expressed on this blog are my own and are not endorsed by any person, vendor, or my employer. This is to say the stuff on my blog is done not as an employee but as just another healthcare IT guy.

UCS Upgrade: I/O Path Update 2 (quick)

In my last post regarding the UCS firmware upgrade process I described our proposed upgrade steps for the FEX modules and the Fabric Interconnects. At the time, the best information I had gathered (release notes, TAC, etc.) indicated the possibility of a one minute interruption of I/O.

One of the great things about coming out to Cisco in San Jose is being able to talk to the people in the business unit who build this stuff. I will have more in-depth discussions tomorrow, but the quick info is some clarification on the “one minute interruption of I/O”. It turns out this really only refers to the Ethernet side of the I/O. Your Fibre Channel storage area network will not have any disruption because of the multipath nature of FC, so no SAN connectivity disruption. On the Ethernet side, it sounds like any disruption really comes down to the MAC tables on the fabric interconnects and a few other failover functions. The smaller the MAC tables, the quicker the “failover”.

Like I said, I will get more details tomorrow but wanted to get this new info out to everyone. I am feeling pretty good about our upgrade next week.